Data understanding part 1

In today's post we will focus on "data understanding", which is a crucial aspect of all data mining projects. Data understanding comes immediately after business understanding in the CRISP-DM methodology:

Per IBM SPSS Modeler Help, the data understanding phase of CRISP-DM involves taking a closer look at the data available for mining. It involves accessing the data and exploring it using tables and graphics. This enables you to determine the quality of the data and describe the results of these steps in the project documentation.

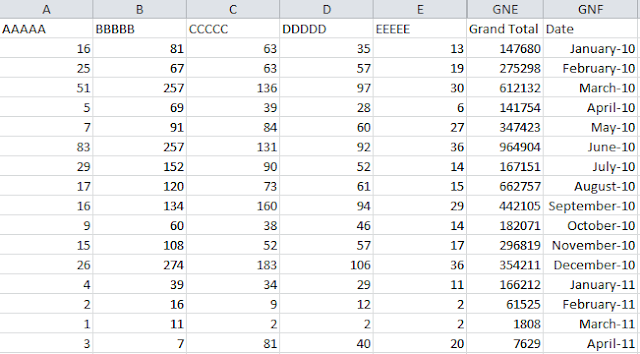

To get started, I used a csv file that was sent to me recently. I dragged a Var. File node onto the modeling canvas, attached the csv file to that node and then output the results into a table node. On reviewing the results, it was clear to me that csv format was not working as desired - the data was not coming through in the correct columns as in the source file. I then saved the source file as an Excel Workbook (2007, 2010) and repeated the process using an Excel source node as follows:

Upon "run"ning the table, we get the following results:

While most of the columns seem to have come through accurately, two new columns appear at the end of the table: C11 and C12. It appears that all values in these columns are "$null$". We will have to explore these further.

Since there are more than 131,000 lines of data, in order to speed up model execution, I have enabled caching on the source node by right clicking on the node and choosing Cache --> Enable.

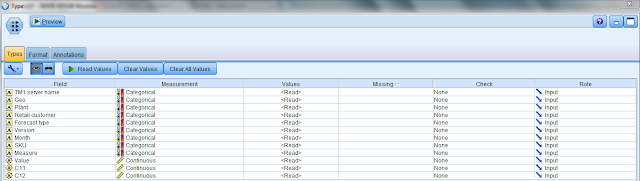

The next step in data understanding is to add a Type node to the Excel source node as follows:

The main purpose of the Type node is to determine the Measurement type and the Role type for each field in the data source. The type node with default values is as follows:

Let us now go through each of the fields and determine the appropriate measurement and role types for each field:

1) TM1 server name: This field represents the name of the TM1 server from which this data has been sourced. While not relevant for the purpose of developing the predictive model, it is still worthwhile going through the exercise of determining the measurement type for this field.

This field contains alphanumeric data so the relevant measurement types are categorical, nominal or typeless. The categorical measurement type is used for string values when an exact number of distinct values is unknown. Since we know the exact number of TM1 servers, the categorical measurement type can be discarded. The nominal measurement type is used to describe data with multiple distinct values, each treated as a member of a set. This measurement type could apply to the TM1 server name. The third measurement type that we should consider is Typeless. The typeless measurement type is used for data that does not conform to any of the above types, for fields with a single value, or for nominal data where the set has more members than the defined maximum (default maximum size for sets is 250 unique values). It is also useful for cases in which the measurement level would otherwise be a set with many members (such as an account number).

Based on the above discussion, we conclude the ideal measurement type for this field is "typeless". When you select Typeless for a field, the role is automatically set to none.

Based on the above discussion, we conclude the ideal measurement type for this field is "typeless". When you select Typeless for a field, the role is automatically set to none.

2) Geo: This field represents the geography of the units sold. Data in this field is also stored as a string. Therefore, once again, the measurement type can be categorical, nominal or typeless. Based on the discussion above, the most appropriate measurement type for this field is "nominal". The role for this field will be set as "input".

3) Plant: This field represents the plant from which the unit has been shipped. Data in this field is also stored as a string. Therefore, once again, the measurement type can be categorical, nominal or typeless. Based on the discussion above, the most appropriate measurement type for this field is "nominal". The role for this field will be set as "input".

4) Retail customer: This field represents the ID of the customer that the unit has been sold to. There appear to be issues with the data in this field since there are several records where no customer number is available. We will have to address this separately. Where records are available, they are in integer form. Therefore, the relevant measurement types could be continuous, flag, nominal or ordinal. Continuous measurement type is used to describe numeric values. Flag is used for data with two distinct values that indicate the presence or absence of a trait, such as true and false, Yes and No or 0 and 1. This is not applicable here. The ordinal measurement type is used to describe data with multiple distinct values that have an inherent order. For example, salary categories or satisfaction rankings can be typed as ordinal data. Since there does not appear to be an inherent order between customer IDs, we reject the ordinal measurement type. By elimination we determine that the relevant measurement type for this field is either continuous or nominal. The role for this field will be set as "input".

5) Forecast type: This field indicates whether the units relate to actuals, budgets or forecasts. Since we only have information regarding actual unit sales, this field is irrelevant to our analysis. As with the TM1 server name field above, we conclude the ideal measurement type for this field is "typeless". The role for this field is automatically set to none.

6) Version: This field identifies that the data provided relates to the "actuals" version. Since we only have information regarding actual unit sales, this field is irrelevant to our analysis. As with the TM1 server name field above, we conclude the ideal measurement type for this field is "typeless". The role for this field is automatically set to none.

7) Month: This field identifies the year and month in which the units were sold. This field should have been stored in the date format as follows: yy-mmm (based on our analysis of the csv source file). However, upon conversion of the csv file to excel (2007, 2010), the format does not appear to be getting picked up correctly. We will have to address this separately. For now, since this data is stored in the date format, the measurement type will have to be continuous (only applicable measurement type for a date field) and the role of the field will be set to input.

8) SKU: This field identifies the SKU or stock keeping unit that is being sold. This field is alphanumeric in nature so once again the measurement type can be categorical, nominal or typeless. However, since the exact number of distinct values is unknown, we use the categorical measurement type for this field and assign it an input role.

9) Measure: This field indicates the measure in which the units sold are displayed. Since all sales are reflected only in units, this field is irrelevant to our analysis. As with the TM1 server name field above, we conclude the ideal measurement type for this field is "typeless". The role for this field is automatically set to none.

10) Value: This field represents the actual number of units sold. The data in this field appears to be stored in real number form since some values have up to 3 decimals. However, on closer examination, it appears that all the decimal values are zeros and therefore can be ignored. Therefore, the data is stored in the form of integers. The relevant measurement types could be continuous, flag, nominal or ordinal. Continuous measurement type is used to describe numeric values and hence is applicable here. The role for this field will be set as "target" since this is the field that we would like to predict using our model.

3) Plant: This field represents the plant from which the unit has been shipped. Data in this field is also stored as a string. Therefore, once again, the measurement type can be categorical, nominal or typeless. Based on the discussion above, the most appropriate measurement type for this field is "nominal". The role for this field will be set as "input".

4) Retail customer: This field represents the ID of the customer that the unit has been sold to. There appear to be issues with the data in this field since there are several records where no customer number is available. We will have to address this separately. Where records are available, they are in integer form. Therefore, the relevant measurement types could be continuous, flag, nominal or ordinal. Continuous measurement type is used to describe numeric values. Flag is used for data with two distinct values that indicate the presence or absence of a trait, such as true and false, Yes and No or 0 and 1. This is not applicable here. The ordinal measurement type is used to describe data with multiple distinct values that have an inherent order. For example, salary categories or satisfaction rankings can be typed as ordinal data. Since there does not appear to be an inherent order between customer IDs, we reject the ordinal measurement type. By elimination we determine that the relevant measurement type for this field is either continuous or nominal. The role for this field will be set as "input".

5) Forecast type: This field indicates whether the units relate to actuals, budgets or forecasts. Since we only have information regarding actual unit sales, this field is irrelevant to our analysis. As with the TM1 server name field above, we conclude the ideal measurement type for this field is "typeless". The role for this field is automatically set to none.

6) Version: This field identifies that the data provided relates to the "actuals" version. Since we only have information regarding actual unit sales, this field is irrelevant to our analysis. As with the TM1 server name field above, we conclude the ideal measurement type for this field is "typeless". The role for this field is automatically set to none.

7) Month: This field identifies the year and month in which the units were sold. This field should have been stored in the date format as follows: yy-mmm (based on our analysis of the csv source file). However, upon conversion of the csv file to excel (2007, 2010), the format does not appear to be getting picked up correctly. We will have to address this separately. For now, since this data is stored in the date format, the measurement type will have to be continuous (only applicable measurement type for a date field) and the role of the field will be set to input.

8) SKU: This field identifies the SKU or stock keeping unit that is being sold. This field is alphanumeric in nature so once again the measurement type can be categorical, nominal or typeless. However, since the exact number of distinct values is unknown, we use the categorical measurement type for this field and assign it an input role.

9) Measure: This field indicates the measure in which the units sold are displayed. Since all sales are reflected only in units, this field is irrelevant to our analysis. As with the TM1 server name field above, we conclude the ideal measurement type for this field is "typeless". The role for this field is automatically set to none.

10) Value: This field represents the actual number of units sold. The data in this field appears to be stored in real number form since some values have up to 3 decimals. However, on closer examination, it appears that all the decimal values are zeros and therefore can be ignored. Therefore, the data is stored in the form of integers. The relevant measurement types could be continuous, flag, nominal or ordinal. Continuous measurement type is used to describe numeric values and hence is applicable here. The role for this field will be set as "target" since this is the field that we would like to predict using our model.

11) and 12) C11 and C12: As indicated above, two new columns appear at the end of the table: C11 and C12. It appears that all values in these columns are "$null$". These will need to be explored further. For now, we will treat these as continuous and set the role to input.

Based on the decisions made above, the type node appears as follows:

Retail customer, month, C11 and C12 fields have unresolved issues that we will need to explore further.

Based on the decisions made above, the type node appears as follows:

Retail customer, month, C11 and C12 fields have unresolved issues that we will need to explore further.

In order to do this, we attach a data audit node to the type node and run the node. We obtain the following results:

While this does not reveal anything new about retail customer and month, it shows us that the Value field is 99.97% complete with the balance data coming through in C11 and C12. Since only a negligible number of records show up in C11 and C12, we can ignore these fields for the purpose of our analysis. In order to do this, we attach a filter node to the type node and cancel out C11 and C12 as follows:

We then attach a Distribution node to the filter node and run the node on the Retail customer field. The output shows us that approximately 50% of the values do not have a customer number and are classified as AMER - no customer (15%), APAC - no customer (18%) and EMEA - no customer (16%) as is seen below:

In order to fix this issue, we could either use the Filler node or go to the source file and make the following changes. Replace:

* AMER - No Customer with 777777

* APAC - No Customer with 888888

* EMEA - No Customer with 999999

While we are in the source file, we also change the format of the date field to mm/dd/yyyy. We now re-import the source file into the modeling canvas and see if the quality of our data has improved:

And voila! The data looks a lot better now. The only issues that remain are columns C11 and C12 which are negligible and can be excluded from further analysis.

This Blog is very useful and informative.

ReplyDeletedata science course chennai