Feature selection for efficient modeling

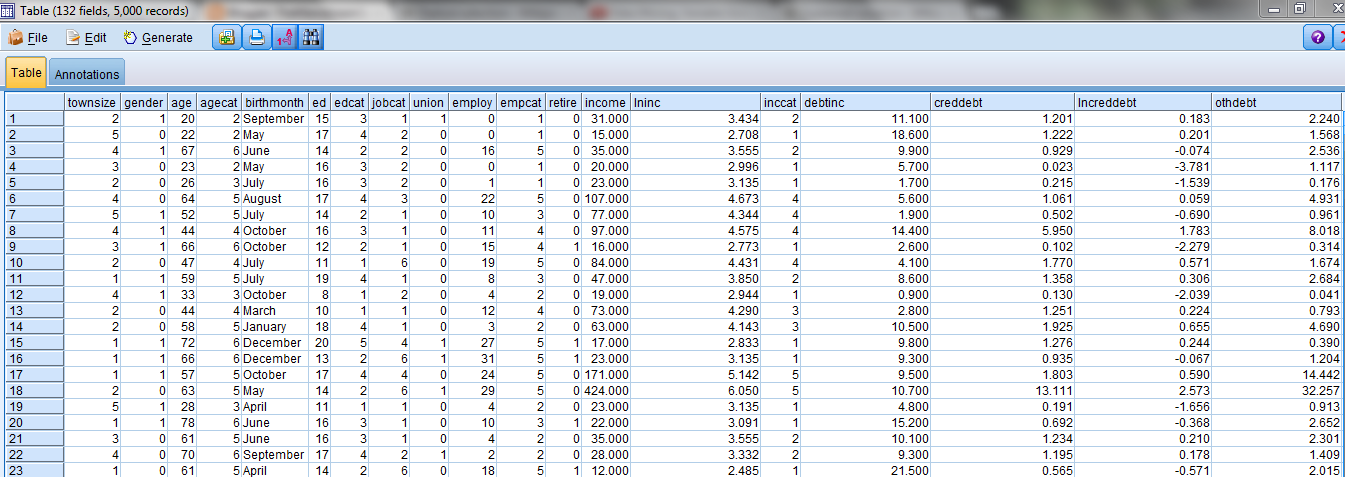

Feature selection, also known as variable selection, feature reduction, attribute selection, or variable subset selection, is the technique of selecting a subset of relevant features for building robust learning models (Source: Wikipedia). Data mining problems may involve hundreds, or even thousands, of variables that could potentially be used as inputs. As a result, a great deal of time and effort may be spent deciding which variables to include in the model. Feature selection allows us to identify the most important variables to use in the modeling process, which can lead to the following benefits:

- Speed up model building: by using only the most important variables, feature selection can significantly reduce processing time and so speed up model building. The greater the number of potential "input" variables, the greater the improvement in model-building speed from using feature selection.
- Reduction in time and cost...