Why neural net models are great at making predictions when the exact nature of the relationship between inputs and the output is not known

In today's post, we explore the use of data mining algorithms to create a SKU (Stock Keeping Unit) level sales forecast. There are several ways of creating a sales forecast, including time series forecasting, simulation and scenario building. Setting these methods aside, we will instead use a neural net model to create a SKU level sales forecast. We will then test the accuracy of the forecast against actual data and try to explain why neural net models are great at making predictions when the exact nature of the relationship between inputs and the output is not known. This matters here because, in addition to historical sales data, our model uses customer demographics as well as unemployment and inflation data. While high-level conclusions can be drawn at the outset about the impact that demographics and economic data can have on sales, the exact nature of the relationship between these inputs (historical sales, demographics, inflation, unemployment) and the output (the sales forecast) is not known.

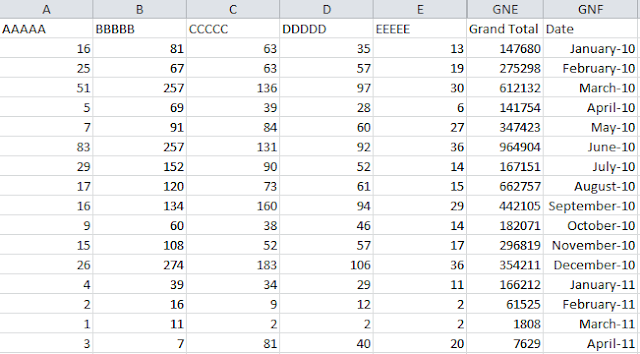

We begin by adding a Var. File source node to the SPSS Modeler canvas and using it to import the CSV file containing historical sales data. The file contains weekly sales for a number of SKUs over four years (2007 through 2010). We then create a SuperNode to prepare the data for modeling.

The SuperNode consists of the following nodes (in order, from left to right):

1) Select Node #1: this Select node selects SKU #1001.

2) Sort Node: this Sort node sorts the data in ascending order by customer location, year and week number, to organize the data.

3) Derive Nodes #1 through #3: these Derive nodes create lag variables (prior-year sales variables for the years 2008, 2009 and 2010). The function used in Derive node #1 is provided below as an example:

4) Select Node #2: this Select node discards all data prior to 2010, since 2010 represents the current year and the previous Derive nodes have created lag fields for 2007, 2008 and 2009.
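The SuperNode's select, sort and lag steps can be sketched outside Modeler in plain Python. This is a minimal sketch, not the post's Derive-node expression (which is not reproduced in the text): it assumes each record is a dict with hypothetical keys `sku`, `location`, `year`, `week` and `units`, and that a lag field means "same location and week, k years earlier".

```python
from typing import Dict, List, Tuple

# Hypothetical record layout: {"sku", "location", "year", "week", "units"}
Record = Dict[str, object]


def add_lag_fields(records: List[Record], sku: int, current_year: int = 2010,
                   lags: int = 3) -> List[Record]:
    """Return current-year rows for one SKU with prior-year sales as lag fields."""
    # Select node #1: keep only the SKU of interest.
    selected = [r for r in records if r["sku"] == sku]
    # Index sales by (location, year, week) for constant-time lag lookups.
    sales: Dict[Tuple[object, object, object], object] = {
        (r["location"], r["year"], r["week"]): r["units"] for r in selected
    }
    # Sort node: ascending by location, year and week.
    selected.sort(key=lambda r: (r["location"], r["year"], r["week"]))
    result: List[Record] = []
    for r in selected:
        # Select node #2: keep only current-year rows.
        if r["year"] != current_year:
            continue
        row = dict(r)
        # Derive nodes: same location and week, 1..lags years earlier (None if absent).
        for k in range(1, lags + 1):
            row[f"units_lag{k}"] = sales.get((r["location"], current_year - k, r["week"]))
        result.append(row)
    return result


data = [
    {"sku": 1001, "location": "A", "year": y, "week": 1, "units": u}
    for y, u in [(2007, 10.0), (2008, 12.0), (2009, 15.0), (2010, 18.0)]
]
data.append({"sku": 2002, "location": "A", "year": 2010, "week": 1, "units": 99.0})
rows = add_lag_fields(data, sku=1001)
print(rows[0]["units_lag1"], rows[0]["units_lag3"])  # 15.0 10.0
```

Looking lags up by key rather than by row offset means a missing week yields `None` instead of silently picking up a neighbouring row.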

The sales data is now prepared. Next, we use Merge nodes with an inner join to bring in first the demographics data and then the unemployment and inflation data.
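An inner join keeps only records whose key appears in both inputs, so sales records without matching demographic or economic data are dropped. A minimal sketch, assuming (hypothetically) that demographics are keyed by customer location and the economic data by year; the post does not name the actual key fields, and the values below are made up.

```python
from typing import Dict, Hashable, List


def inner_join(left: List[dict], right: List[dict], key: str) -> List[dict]:
    """Merge two record lists on `key`, keeping only keys present in both."""
    # Group right-hand records by key so one-to-many matches are all kept.
    index: Dict[Hashable, List[dict]] = {}
    for rec in right:
        index.setdefault(rec[key], []).append(rec)
    merged = []
    for left_rec in left:
        for right_rec in index.get(left_rec[key], []):  # no match: record dropped
            merged.append({**left_rec, **right_rec})  # right wins on name clashes
    return merged


# Made-up illustrative records; field names are hypothetical.
sales = [
    {"location": "A", "year": 2010, "week": 1, "units": 18.0},
    {"location": "B", "year": 2010, "week": 1, "units": 7.0},
]
demographics = [{"location": "A", "median_income": 50000}]
economy = [{"year": 2010, "unemployment": 8.5, "inflation": 2.1}]

step1 = inner_join(sales, demographics, key="location")  # location B has no match
step2 = inner_join(step1, economy, key="year")
print(len(step2), step2[0]["unemployment"])  # 1 8.5
```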

In the next step, we insert a Type node where we designate Unit Sales as the "Target" field, designate irrelevant fields as "None" and designate all other fields as "Input" fields. Finally, we partition the data as follows:
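The field roles and the random split can be sketched in plain Python to show what these two steps do to each record. The field names, the 70/30 split and the seed are illustrative assumptions; the post's actual partition settings are not given in the text.

```python
import random
from typing import Dict, List, Tuple

# Field roles as they might be set in the Type node (hypothetical field names).
ROLES: Dict[str, str] = {
    "units": "target",
    "units_lag1": "input",
    "units_lag2": "input",
    "unemployment": "input",
    "record_id": "none",  # irrelevant field: ignored by the model
}


def to_xy(record: dict) -> Tuple[List[float], float]:
    """Split a record into its input vector and its target value using ROLES."""
    x = [float(record[f]) for f, role in ROLES.items() if role == "input"]
    target = next(f for f, role in ROLES.items() if role == "target")
    return x, float(record[target])


def partition(records: List[dict], train_frac: float = 0.7,
              seed: int = 42) -> Tuple[List[dict], List[dict]]:
    """Randomly assign records to a training and a testing partition."""
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    shuffled = records[:]
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * train_frac)
    return shuffled[:cut], shuffled[cut:]


records = [
    {"record_id": i, "units": 10 + i, "units_lag1": 9 + i, "units_lag2": 8 + i,
     "unemployment": 9.0}
    for i in range(10)
]
train, test = partition(records)
print(len(train), len(test))  # 7 3
print(to_xy(records[0]))  # ([9.0, 8.0, 9.0], 10.0)
```

Holding back a testing partition is what lets the model's accuracy be checked on records it did not see during training.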

We then attach a Neural Net node and run the prepared data through the Neural Net algorithm to generate our forecast model. The final modeling canvas appears as follows:

On browsing the neural net model, we observe the following results:

We observe that the model is 99.7% accurate. The neural net model used is Multilayer Perceptron (more on that later).
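The post does not say how the 99.7% figure is computed. IBM's help for the neural network model summary describes accuracy for a continuous target as 1 minus the ratio of the mean absolute prediction error to the range of the predicted values; assuming that definition applies here, it can be computed as below. The actual and predicted values are made up for illustration.

```python
from typing import Sequence


def modeler_style_accuracy(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """1 - MAE / (max(predicted) - min(predicted)), for a continuous target."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    mae = sum(abs(p - a) for a, p in zip(actual, predicted)) / len(actual)
    spread = max(predicted) - min(predicted)
    if spread == 0:
        raise ValueError("predicted values have zero range")
    return 1.0 - mae / spread


actual = [100.0, 120.0, 150.0, 200.0]
predicted = [101.0, 119.0, 151.0, 199.0]
print(round(modeler_style_accuracy(actual, predicted), 4))  # 0.9898
```

Because the error is divided by the spread of the predictions, this measure is not the same as a percentage error per record.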

The predictor importance graph shows that the most important predictors in determining the forecast are unit sales for the two years prior to the year that we are trying to forecast.
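Modeler computes predictor importance with its own method, which the post does not describe. To give a feel for what "importance" means, the sketch below swaps in a different, widely used technique, permutation importance: shuffle one input at a time and measure how much the prediction error grows. The model and data are hypothetical stand-ins, not the post's trained network.

```python
import random
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], float]


def mean_abs_error(model: Model, X: List[List[float]], y: List[float]) -> float:
    return sum(abs(model(x) - t) for x, t in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: List[List[float]], y: List[float],
                           seed: int = 0) -> List[float]:
    """Increase in MAE when each input column is shuffled (larger = more important)."""
    rng = random.Random(seed)
    base = mean_abs_error(model, X, y)
    scores = []
    for j in range(len(X[0])):
        column = [row[j] for row in X]
        rng.shuffle(column)  # break the link between input j and the target
        X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
        scores.append(mean_abs_error(model, X_perm, y) - base)
    return scores


# Hypothetical stand-in for a trained model; inputs are
# (sales 1 year ago, sales 2 years ago, unemployment rate).
def toy_model(x: Sequence[float]) -> float:
    lag1, lag2, unemployment = x
    return 0.8 * lag1 + 0.2 * lag2 - 0.01 * unemployment


rng = random.Random(1)
X = [[rng.uniform(50, 150), rng.uniform(50, 150), rng.uniform(4, 10)] for _ in range(200)]
y = [toy_model(x) for x in X]
print([round(s, 2) for s in permutation_importance(toy_model, X, y)])
```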

The chart above plots actual unit sales against the predicted values to show the accuracy of the predictive model visually.

From the above, the neural net model appears to be very good at making predictions even though the exact nature of the relationship between the inputs and the output is not known. Why is this the case? To understand this better, we will attempt to demystify the neural net data mining algorithm.

A neural network is a simplified model of the way the human brain processes information. It works by simulating a large number of interconnected processing units that resemble abstract versions of neurons. The processing units are arranged in layers. There are typically three parts in a neural network: an input layer, with units representing the input fields; one or more hidden layers; and an output layer, with a unit or units representing the target field(s). The units are connected with varying connection strengths (or weights). Input data are presented to the first layer, and values are propagated from each neuron to every neuron in the next layer. Eventually, a result is delivered from the output layer. (Source: IBM SPSS Modeler Help)
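The layered structure described above can be made concrete with a forward pass through a tiny fully connected network. This is a minimal sketch with arbitrary, hand-picked weights; the sigmoid hidden activation and the single linear output unit are assumptions for illustration, not settings taken from the post's model.

```python
import math
from typing import List

Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(x: List[float], w_hidden: Matrix, b_hidden: List[float],
            w_out: List[float], b_out: float) -> float:
    """Propagate one record from the input layer through one hidden layer
    to a single linear output unit (a continuous target such as unit sales)."""
    # Every input feeds every hidden unit, weighted by its connection strength.
    hidden = [
        sigmoid(sum(w * xi for w, xi in zip(weights, x)) + b)
        for weights, b in zip(w_hidden, b_hidden)
    ]
    # Every hidden unit feeds the output unit.
    return sum(w * h for w, h in zip(w_out, hidden)) + b_out


# 3 inputs -> 2 hidden units -> 1 output, with arbitrary illustrative weights.
w_hidden = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
b_hidden = [0.0, 0.1]
w_out = [1.5, -0.7]
b_out = 0.2
print(round(forward([1.0, 0.5, -1.0], w_hidden, b_hidden, w_out, b_out), 4))
```

Training, described next, is the process of finding values for these weights instead of picking them by hand.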

The network learns by examining individual records, generating a prediction for each record, and making adjustments to the weights whenever it makes an incorrect prediction. This process is repeated many times, and the network continues to improve its predictions until one or more of the stopping criteria have been met. Initially, all weights are random, and the answers that come out of the net are probably nonsensical. The network learns through training. Examples for which the output is known are repeatedly presented to the network, and the answers it gives are compared to the known outcomes. Information from this comparison is passed back through the network, gradually changing the weights. As training progresses, the network becomes increasingly accurate in replicating the known outcomes. Once trained, the network can be applied to future cases where the outcome is unknown. (Source: IBM SPSS Modeler Help)

This type of learning is called Supervised Learning. In Supervised Learning, the goal is to infer the underlying relationship between the inputs and a known output from the examples provided. One method of Supervised Learning is the Multilayer Perceptron, which is the type of network used by our neural net model. The Multilayer Perceptron learns using a technique called Backpropagation.

The Backpropagation learning algorithm consists of two phases:

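1) Propagation: in this phase, the inputs of a training record are propagated forward through the neural network to produce the outputs. The outputs are then compared with the known target values, and the resulting errors are propagated backward through the network to generate the deltas (error terms) for the output and hidden layers.

2) Weight update: for each connection, the delta of the unit it feeds into is multiplied by the activation of the unit it comes from to obtain the gradient, and the weight is adjusted in the opposite direction of the gradient by an amount controlled by the learning rate.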
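Both phases can be illustrated with a one-hidden-layer network trained by per-record gradient descent on squared error. This is a minimal sketch in plain Python, not Modeler's implementation: the helper name `train_mlp`, the tanh hidden units, the learning rate, the stopping rule and the toy target `y = 0.5*x1 + 0.3*x2^2` are all illustrative assumptions.

```python
import math
import random
from typing import Callable, List, Tuple

Sample = Tuple[List[float], float]


def train_mlp(data: List[Sample], n_hidden: int = 4, lr: float = 0.05,
              max_epochs: int = 1000, tol: float = 1e-4,
              seed: int = 0) -> Tuple[Callable[[List[float]], float], float, int]:
    """Train a 1-hidden-layer MLP (tanh hidden units, linear output) by backpropagation."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    # Initially all weights are random.
    w_h = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    b_h = [0.0] * n_hidden
    w_o = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    b_o = 0.0

    def predict(x: List[float]) -> Tuple[List[float], float]:
        h = [math.tanh(sum(w * xi for w, xi in zip(ws, x)) + b) for ws, b in zip(w_h, b_h)]
        return h, sum(w * hj for w, hj in zip(w_o, h)) + b_o

    mse = float("inf")
    epoch = 0
    for epoch in range(1, max_epochs + 1):
        for x, target in data:
            # Phase 1a: propagate the inputs forward to produce the output.
            h, y = predict(x)
            # Phase 1b: propagate the error backward to get the deltas.
            delta_o = y - target  # derivative of 0.5 * (y - target)^2 w.r.t. y
            delta_h = [delta_o * w_o[j] * (1.0 - h[j] ** 2) for j in range(n_hidden)]
            # Phase 2: weight update; gradient = delta * activation feeding the weight.
            for j in range(n_hidden):
                w_o[j] -= lr * delta_o * h[j]
                b_h[j] -= lr * delta_h[j]
                for i in range(n_in):
                    w_h[j][i] -= lr * delta_h[j] * x[i]
            b_o -= lr * delta_o
        # Stopping criterion: training error small enough (or epochs exhausted).
        mse = sum((predict(x)[1] - t) ** 2 for x, t in data) / len(data)
        if mse < tol:
            break
    return (lambda x: predict(x)[1]), mse, epoch


grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
# Toy relationship the network is never told about: y = 0.5*x1 + 0.3*x2^2.
data = [([a, b], 0.5 * a + 0.3 * b * b) for a in grid for b in grid]
model, mse, epochs = train_mlp(data)
print(f"stopped after {epochs} epochs, training MSE = {mse:.5f}")
print(f"prediction at (0.5, 0.5): {model([0.5, 0.5]):.3f} (true value 0.325)")
```

The network only ever sees input/target pairs, never the formula, which is the point the post makes about relationships that are not known in advance.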

These two phases are repeated until the network's predictions match the known outcomes as closely as possible, or until another stopping criterion is met. This is why neural net models are great at making predictions when the exact nature of the relationship between inputs and the output is not known: the relationship does not have to be specified in advance. As the training data passes through the network again and again, the weights are adjusted so that the learned mapping between the inputs and the output increasingly "mimics" the actual underlying relationship between them.
